The whole point of user research is that you get to observe real members of your target user group interacting with your product. However, the cash incentive that you offer – typically £50 for an hour – is compelling enough to make some people bend the truth, and this is compounded by the chain of people involved in the recruitment. For example, if you outsource a research project to a UX consultancy, they will probably outsource the recruitment to a specialist agency, who in turn may outsource to a number of independent freelancers. As the client sitting on the receiving end, you have to be confident that it’s being carried out in a rigorous way.

Even if your recruitment agency are trying their best, it’s a sad reality that there are diminishing returns in weeding out end users who fib. They can’t really hire in Columbo to investigate every user. And if, during the sessions, the research facilitator starts to suspect the participant might be a dud, what can they do? It’s an awkward situation, especially if they have their client watching from behind the two-way mirror. The researcher can continue the interview without pushing the issue, or they can deviate from the script and start cross-questioning the participant on their honesty, which will ruin the rapport, take time, and probably won’t be effective in any case.

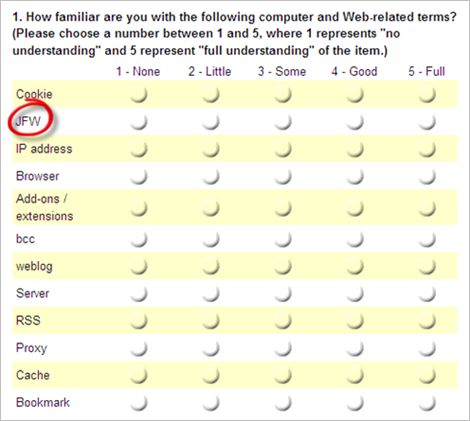

In fact, a lot of liars can be screened out by writing a really good screener questionnaire. For example, here’s a decoy question that the Mozilla metrics team used in their recent Test Pilot survey.

The goal of the question above was to ascertain the experience level of a respondent, so the data could be segmented. To sift out the deluded novices and liars, the Mozilla Metrics team added a made-up acronym – JFW – on the rationale that anyone who ticks “full understanding” for this item and all the others can be flagged as a suspect respondent.
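If you process screener responses yourself, the flagging rule above is easy to automate. The sketch below is a minimal illustration, assuming each respondent's answers are stored as a dictionary from term to self-rated level; the rating label, the genuine terms (HTTP, DNS) and the `is_suspect` helper are hypothetical, and only the use of JFW as a decoy comes from the Mozilla survey.

```python
# Hypothetical rating label; the real Test Pilot survey wording may differ.
FULL = "full understanding"

# Made-up items that no genuine respondent should claim to know fully.
DECOY_TERMS = {"JFW"}


def is_suspect(ratings: dict[str, str]) -> bool:
    """Flag a respondent who claims full understanding of a decoy term
    and of every other term on the list."""
    claims_decoy = any(ratings.get(term) == FULL for term in DECOY_TERMS)
    claims_everything = all(level == FULL for level in ratings.values())
    return claims_decoy and claims_everything


# Hypothetical responses: term -> self-rated level.
respondents = {
    "r1": {"HTTP": FULL, "DNS": FULL, "JFW": FULL},
    "r2": {"HTTP": FULL, "DNS": "some understanding", "JFW": "never heard of it"},
    "r3": {"HTTP": FULL, "DNS": "some understanding", "JFW": FULL},
}

suspects = [rid for rid, ratings in respondents.items() if is_suspect(ratings)]
print(suspects)  # ['r1']
```

Respondent r3 claims the decoy but not everything else, so the rule as described leaves them unflagged. A stricter screener could flag on the decoy alone, at the cost of more false positives if the "made-up" term turns out to be real.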

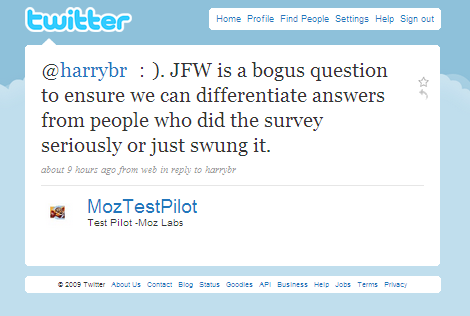

Don’t believe me on the JFW acronym? I asked the Moz Labs team, just to be sure:

It’s also fairly likely you will want to recruit participants who have used your product a certain number of times. If you ask them directly (“Have you used mysite.com at least 3 times in the past month?”), the respondent will easily guess what they are meant to say to “win” the research. So you should always hide the qualifying answer among a number of decoy questions, or ask open questions instead.

Another trick I’ve recently started using is placing a stern warning on the screener about honesty. For example, if you’re testing an ecommerce site, you can state that a substantial part of the interview will involve being signed in to the site and referring to their purchase history page. If they don’t have a history spanning over 3 months, tell them they will be turned away without payment. This does sound a bit harsh, but it works.

To sum up, you face a real risk if you rely on your recruitment agency to take care of the screener behind the scenes. When engaging with a new agency, ask them what they do to screen out liars, and always be certain to review the final questionnaire before it gets deployed.

Do you have any other screener tips? Add them in the comments!

We don’t use external agencies for recruitment, as we’ve had poor experiences with them. Real users are the bedrock of solid research – especially when using eye tracking, as the eyes give everything away.

We rarely let the user know who the end client/website is before the research session, as they usually prep and learn how to use the site beforehand – just as you would prep for a test – which means you’re not observing natural behaviour.

Just be very careful in your choice of bogus question. I once worked on a project where they used such bogus questions. One item in the questionnaire was supposed to be a bogus drug, but it turned out to exist :-) (I searched for it thoroughly, as I hadn’t been told and didn’t know the drug.)

So there’s actually a Java framework called JFW, so be careful what you use as a bogus item.

See

http://sourceforge.net/projects/jfwprj/

@Roland – very true, and JFW is sometimes used to stand for “JAWS For Windows”, a screen reader (though not particularly often). So this example isn’t perfect…

I agree 100% that screening questions must be well constructed and “hide the qualifying answer among a number of decoy questions, or by asking open questions.”

An effective tactic I’ve used to avoid the fibbers is to ask the same question in different contexts: within the web-based screener and then during a follow up phone call. I typically pose two of these “test” questions to gauge honesty (they may or may not be pertinent to qualifying for the test).

For example, I find age to be the number one thing people “lie” about. An initial web-based screener will often ask someone to specify their age range. You can follow up by phone and ask them their specific age and/or birthdate. That’s when they get “caught”.
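The age cross-check amounts to computing an age from the birthdate given on the phone and testing it against the range ticked online. Here is a minimal sketch, assuming the range is stored as inclusive integer bounds and the call date is known; the helper names and sample values are hypothetical.

```python
from datetime import date


def age_on(birthdate: date, today: date) -> int:
    """Whole years of age on `today`."""
    had_birthday = (today.month, today.day) >= (birthdate.month, birthdate.day)
    return today.year - birthdate.year - (0 if had_birthday else 1)


def age_consistent(stated_range: tuple[int, int], birthdate: date, today: date) -> bool:
    """True if the birthdate given on the phone matches the age range
    ticked on the web screener (bounds inclusive)."""
    low, high = stated_range
    return low <= age_on(birthdate, today) <= high


# Hypothetical respondent: ticked "25-34" online, gave a birthdate by phone.
call_date = date(2009, 9, 25)
print(age_consistent((25, 34), date(1980, 10, 1), call_date))  # True (age 28)
print(age_consistent((25, 34), date(1987, 3, 1), call_date))   # False (age 22)
```

Passing the call date explicitly, rather than reading today's date, keeps the check reproducible when you review the screener results later.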

It’s also good to ask at least one open-ended question to get a feel for their character and how they communicate. After all, they will be required to share and communicate a lot during a testing session.

If, after the phone screening, their qualifications are at all questionable, I would eliminate them from the pool. If you’re lacking a large pool of qualified candidates, I would have a second phone conversation asking your test questions again. Repeat conversations or meetings are good practice in general, from participant screening to conducting a job interview. Whenever character is in question, I find the third round of meetings/interviews to be telling.

I agree that open questions are really useful for getting a feel for the user.

The funniest response to a simple open question we’ve had was ‘none of your business!’

Why would you say that when applying to attend market research sessions, especially after investing time to fill out all the other details?

@Sandy, thanks for the age check tip. Agree totally about also testing their articulation. I sometimes use a question like “If you won £5000 in the lottery, what would you spend it on and why?” If their answer is monosyllabic, you know they won’t make good participants.

Good points, Harry, but I’d steer clear of market-research-type outfits for recruitment. I’ve seen the other side too: once a market research recruiter has someone useful, that recruiter will keep on using them for everything, and they (the recruiter) can even lie themselves about that participant to keep things easy and sweet (e.g. lying about the participant’s profession so they aren’t screened out).

And what I saw when doing ethnographic fieldwork in one such org (iSociety project, resulting in the Technology in the UK Workplace report) did not impress at all. Deeply flawed.

@Louise – I’m inclined to agree but I haven’t quite made the break yet.

In a recent study I did using one of “those” agencies, I recognized one of my participants from a session I happened to oversee at a company across town a few days previously. My screener had specified strictly no people who had attended market research in the past 6 months. I confronted the recruitment agency about it and they said they had to bend the screener in order to fill my quota (without warning me). No apology, no refund. That kind of attitude makes me really angry!

How do you source your users, then?

@Roland. Agreed. JFW is not a made-up acronym. Still, I probably wouldn’t want a job at an IT company where they didn’t know about Google.

“JFW is a Java framework and library that simplifies the creation of web based Java applications.”

http://sourceforge.net/projects/jfwprj/


Since we recruit users live from the web for our remote research studies, we’ve also found that fakers can be an even bigger problem than in a typical pre-scheduled study. Occasionally, when people catch wind of a paid survey offer, they post it on “bargain hunting sites” like FatWallet. Here are some ways we deal with them:

—We always include an open-ended question to test people’s motives for coming to the site. If someone responds to the question “Why did you come to the site today?” with a vague answer like “To check the offerings” or “Just looking around”, consider that a yellow flag, and follow up with more specific interview questions.

—When we get a sudden surge of recruits, we check the referrer data in our recruiting tool (Ethnio) to confirm where users are coming from.

—The blunt honesty approach you mention in the post also works for us: making it clear that they won’t receive an incentive if it’s discovered that they don’t qualify for the study. Sometimes we’ve added questions like “Have you been completely honest in filling out this survey?” And you’d be surprised how many people say No.

—Live recruiting from the web is a big help for dealing with fakers: on top of screening the recruits, you can also dismiss fakers with impunity, because there are always more valid recruits coming in. This is a benefit of remote user research methods in general: since users don’t have to travel anywhere and there’s no advance scheduling, the stakes of dismissing an unqualified user are lower.
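The referrer check from the list above can be approximated by measuring what share of a batch of recruits came from each referring host. The sketch below assumes you can export the raw referrer URLs from your recruiting tool; it does not use Ethnio’s actual export format or API, and the 50% threshold, helper names and sample URLs are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse


def referrer_shares(referrers: list[str]) -> dict[str, float]:
    """Fraction of recruits arriving from each referring host.
    Empty referrers (typed-in URLs, bookmarks) are grouped as '(direct)'."""
    hosts = [urlparse(r).netloc.lower() or "(direct)" for r in referrers]
    counts = Counter(hosts)
    return {host: n / len(hosts) for host, n in counts.items()}


def dominant_sources(referrers: list[str], threshold: float = 0.5) -> list[str]:
    """Hosts responsible for at least `threshold` of the recruits in this batch."""
    shares = referrer_shares(referrers)
    return sorted(host for host, share in shares.items() if share >= threshold)


# Hypothetical batch of referrers from a sudden surge of sign-ups.
batch = ["http://www.fatwallet.com/"] * 6 + [
    "http://www.google.com/search?q=shoes",
    "http://mysite.com/",
    "",
]
print(dominant_sources(batch))  # ['www.fatwallet.com']
```

Using a share rather than an absolute count means the same threshold works whether a surge brings in ten recruits or a hundred; a host that dominates the batch is worth a closer look before anyone is invited to a session.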

We talk about live recruiting on our website here: http://boltpeters.com/services/recruiting/

And we also go more in-depth on screening recruits in Chapter 3 of our Remote Research book: http://www.rosenfeldmedia.com/books/remote-research/

Tony, I’m looking forward to getting a copy of your book when it comes out.