OK, so I’m following up on last week’s post about Maxymiser’s MVT research. The findings are revealed below, and a few of you got your predictions wrong! It just goes to show that when you are putting the final polish on live page designs (i.e. sweating over 0.1% improvements in your conversion rates), multivariate testing is a really useful method that can yield quite unexpected findings.

If you didn’t read my previous post, you may want to take a quick look at it and read the comments. Put briefly, Maxymiser recently released the findings from a multivariate testing project. Multivariate testing is, in case you don’t know, a method that allows you to test different combinations of page designs on your live user-base, and select the most effective by monitoring your analytics metrics. The method has very good ecological validity, as you know for sure that you are measuring the behaviour of your real users in their natural environment. In fact, they don’t even know they are being researched, making it almost the polar opposite of traditional lab-based usability testing.

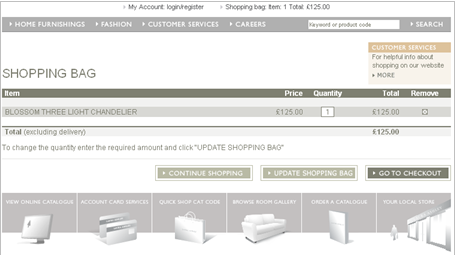

In this study, Maxymiser tested five different versions of the shopping basket page for the Laura Ashley online store. Bear in mind that the percentages shown below are percentage uplifts on the conversion rate, rather than the conversion rate itself. For example, if you had a 10% conversion rate and then had an uplift of 9%, you’d end up with a conversion rate of 10.9%.
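The uplift arithmetic can be sketched in a few lines of Python. This is only an illustration: the function name `apply_uplift` is made up for this post, and the 10% baseline is the hypothetical figure from the example above, since the store’s real conversion rate wasn’t published.

```python
def apply_uplift(base_rate_pct, uplift_pct):
    """Return the new conversion rate (in %) after a relative uplift (in %)."""
    return base_rate_pct * (1 + uplift_pct / 100)


# Hypothetical 10% baseline, as in the example above (not the real figure).
BASELINE_PCT = 10.0

for uplift in (9.0, -9.0):
    new_rate = apply_uplift(BASELINE_PCT, uplift)
    print(f"{uplift:+.1f}% uplift on {BASELINE_PCT:.1f}% -> {new_rate:.2f}%")
```

A downlift is just a negative uplift, so a 9% downlift on the same hypothetical baseline would leave you at 9.1%.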

First place with an 11.02% uplift on the default: Version 3:

Second place with a 3.88% uplift on the default: Version 5:

The default design (with 0% uplift): Version 1:

Fourth place with a 1.92% downlift: Version 2:

Fifth place with a 7.85% downlift: Version 4:

And the margin of error was?

A big caveat that you don’t mention is that this sort of testing only works on high-volume sites. To get a significant result, each variant would need many exits; how many depends on the uplift of each variant. This is to make sure that you are not measuring noise instead of real behaviour. The test also needs to be done over a short period of time to make sure that your results are not influenced by seasonal factors.

The other negative is that users can be faced with a changing user interface. If I log in from home and the checkout button has moved around, you may lose the sale.

This method of testing is great for working out very small changes on very large volume sites.

Yes, I didn’t really start talking about the drawbacks of the method – but like anything else it has its strengths and weaknesses. If you have lots of combinations you want to test, this creates a combinatorial explosion and can take months to get enough data, even from a fairly high volume site.
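To put rough numbers on that combinatorial explosion, here is a minimal sketch. Everything in it is hypothetical: the page elements, the visitors needed per combination and the daily traffic are invented for illustration, and it assumes every combination is tested with an equal share of traffic, which a real testing tool may not do.

```python
import math
from itertools import product

# Hypothetical page elements and their variants (not from the Maxymiser study).
ELEMENTS = {
    "checkout_button": ["top", "bottom", "both"],
    "delivery_blurb": ["shown", "hidden"],
    "promo_banner": ["none", "small", "large"],
}


def count_combinations(elements):
    """Number of page versions if every variant is combined with every other."""
    return math.prod(len(variants) for variants in elements.values())


def days_needed(elements, visitors_per_combination, daily_visitors):
    """Rough test duration, assuming traffic is split evenly across combinations."""
    total_visitors = count_combinations(elements) * visitors_per_combination
    return math.ceil(total_visitors / daily_visitors)


# List every combination explicitly to show what would have to be served.
all_versions = list(product(*ELEMENTS.values()))
print(len(all_versions), "combinations, e.g.", all_versions[0])

# Assumed figures: 10,000 visitors per combination, 2,000 basket visitors a day.
print(days_needed(ELEMENTS, 10_000, 2_000), "days")
```

With these made-up figures, three small elements already give 18 combinations and a 90-day test. Adding one more two-variant element doubles both.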

Also, it’s clearly a useless method if you don’t have a live site with a healthy user-base.

That’s really interesting. I couldn’t have been more wrong. There’s not a huge difference between the best and the worst. An extra button and some blurb about delivery. Perhaps the delivery blurb was a bit off-putting?

:-)

It is fascinating to see the results. Can anyone explain the difference in performance between Version 5 and Version 4?

If you want to see some larger screen grabs, click here.

The 1st comment is correct. What is the margin of error?

I was considering this myself, so I had a look at the pages in the process.

You need to provide lots of information before you see the delivery costs.

I’d be interested in seeing how a page worked just like version 3 but with an intelligent/dynamic and simple provision of delivery costs up front.

There are two factors I’m really interested in.

Is it the complexity of the delivery costs that causes the problem? Or is it the fact that they are introduced so early in the process?

If the delay of delivery costs were to cause an uplift in conversion then that really would be interesting.

I know, I should get out more.

Hi guys,

To address some of the points that James raises at the top of the thread, the Maxymiser system monitors statistical chance to beat all percentages to ensure that statistical validity is reached before we draw conclusions.

We also identify new vs returning visitors so that a returning visitor always sees the same variant – important for usability and the stats.

We can also test changes across multiple pages, something many of our competitors struggle with, making multi-page form testing possible.

Delivery cost is a tricky one, you’re right on that point. I believe in this particular test that the client was interested in discovering the point at which this should be presented in order to minimise exit rates. Multivariate testing can be used progressively alongside funnel analysis to push more traffic through the funnel one page at a time.

Feel free to contact me privately or follow up here with any questions, I will monitor this page for a few weeks.

Kind regards,

Alasdair (Maxymiser)

I disagree, Pedro. Maxymiser works on live sites, not on development ones. It’s also not a recruited sample. It’s real customers buying real products.

All versions run concurrently, so seasonal variations are not an influence either as far as I’m aware.

Oh, there was me leaping to your defence and you’d gone and done it already :0)

Did you measure conversion/sales rate and/or click-through rate? If only conversion rate, how can you tell that any individual version was really more successful in purchasing (as the checkout funnel is a multiple-step process which isn’t displayed in the test)? I’d be interested to find out more about how these numbers were determined.

Additionally, what statistical significance did you reach? 90%? 95%? 98%? 99.9%?

Zachary,

you are asking all the right questions. This wasn’t my own study – I am just reblogging work done by Maxymiser.

There are more details about the study in the previous post.

Maxymiser assure me that the study reached P<0.05, i.e. a 95% confidence level.
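For anyone wondering what a P<0.05 check looks like in practice, here is a minimal sketch using a two-proportion z-test. This is an assumption on my part: I don’t know which statistical test Maxymiser actually ran, and the visitor and click-through counts below are invented purely for illustration.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Invented counts: 10,000 visitors each, 1,000 vs 1,110 click-throughs.
z, p = two_proportion_z_test(1000, 10_000, 1110, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 95%: {p < 0.05}")
```

The same difference in rates measured on a tenth of the traffic would not pass the 0.05 threshold, which is why sample size matters so much here.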

The conversion measured was clickthroughs into the checkout process.

Zachary, I spoke to Alasdair from Maxymiser on your first point. Apparently his client only allowed a little bit of the results to be used in publicity, so it was just clickthroughs. My own concern was that version 4 was the only one that wouldn’t introduce a hidden cost later on in the process.

I can only guess that version 3 was the best in terms of conversion because this is the page they have on their live site now.